Agentic AI: The Next Evolution in Machine Learning

In recent years, AI has made tremendous strides — from rule-based systems to deep neural networks. But in 2025, something fundamentally new is happening: AI is beginning to act, not just predict. This new agentic AI paradigm means models now perceive their environment, set goals, and carry out multi-step tasks almost autonomously. Unlike a traditional ML model that passively outputs a prediction, an agentic system can observe inputs or data streams, reason about what to do next, and then execute actions (calling APIs, writing code, notifying users, etc.) with minimal human intervention. Under the hood, this shift is powered by large foundation models (like GPT-4, Claude, or open-source LLMs) paired with explicit memory systems, planners, and tool integrations. In this blog post, we’ll unpack what agentic AI is, how it differs from traditional ML, and explore examples of agentic workflows in action. Let’s dive in!

What is Agentic AI?

At its core, an AI agent is a system that perceives, decides, and acts in pursuit of a goal. Traditional AI systems typically function as predictors: you give them an input, and they produce an output in one shot. An agentic AI system, by contrast, is like an autonomous assistant. It actively observes inputs (text, data streams, API responses), maintains context or memory, and makes plans to achieve higher-level objectives.

For example, imagine an assistant that helps schedule your meetings. A conventional model might only generate a suggested time when you ask. An agentic version would notice your email requesting a meeting, recall your calendar preferences (from memory), check colleagues’ schedules via API, propose an optimized meeting time, send invites, and update your calendar, all on its own. In short, the system wraps a language model with extra components: a memory store, decision logic, and tool interfaces.

This shift isn’t just theoretical. With frameworks like LangChain, AutoGen, and others, developers can now chain models with search, code execution, and databases, effectively transforming LLMs from passive predictors into active agents. Agentic AI systems are beginning to power new kinds of applications: from chatbots that autonomously resolve customer tickets end-to-end to research assistants that gather data, analyze it, and draft reports with little oversight.

ML vs Agentic AI

Traditional machine learning models are essentially stateless functions: input in, output out. You train a model on data, and it makes predictions or classifications when you query it. Each inference is independent, and the model doesn’t “remember” past interactions. In contrast, agentic AI is stateful and goal-driven. Here are some key differences:

- Workflow : Traditional ML is one-shot (e.g. classify this image, generate text for this prompt). Agentic AI engages in multi-step processes. It can take a series of actions (searching, computing, writing) until a goal is achieved.

- State & Memory : Standard models do not track context beyond a single query. Agentic systems maintain memory (via databases or vector stores) to recall previous data or decisions. This lets them build on past work, not start from scratch each time.

- Autonomy : Classic ML models require user prompts at every step. Agentic AI, on the other hand, operates with high autonomy. Once given an objective, it can decide when and how to act — calling tools or APIs without needing us to spell out each step.

- Purpose : The primary function of traditional AI is content generation or task automation under fixed rules. Agentic AI’s primary function is goal-oriented action and decision-making. It actively seeks to fulfil objectives rather than just respond to commands.
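The stateless-versus-stateful distinction can be made concrete in a few lines. This toy sketch uses hypothetical names (`classify_sentiment`, `StatefulAgent`) and a keyword check in place of a real model; the point is only that the agent's answer to the same input changes once its memory does:

```python
from dataclasses import dataclass, field


def classify_sentiment(text: str) -> str:
    """Stateless predictor: the same input always yields the same output."""
    return "positive" if "great" in text.lower() else "neutral"


@dataclass
class StatefulAgent:
    """Toy stateful agent: keeps a goal plus a memory of everything it has observed."""
    goal: str
    memory: list[str] = field(default_factory=list)

    def step(self, observation: str) -> str:
        self.memory.append(observation)
        # The decision depends on the whole history, not just the current input.
        if any(self.goal in m for m in self.memory):
            return "goal reached: stop"
        return f"keep searching (observations seen: {len(self.memory)})"


print(classify_sentiment("great demo"), classify_sentiment("great demo"))  # positive positive

agent = StatefulAgent(goal="invoice")
print(agent.step("email about lunch"))            # keep searching (observations seen: 1)
print(agent.step("email with invoice attached"))  # goal reached: stop
print(agent.step("email about lunch"))            # goal reached: stop
```

The same input, "email about lunch", produces two different outputs because the agent carries state between calls.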

In other words, traditional ML is like a passive worker following scripted instructions, whereas agentic AI is like an empowered collaborator that can think several steps ahead. This “proactive agent” model represents a significant evolution in how we build AI systems.

Core Components of Agentic Systems

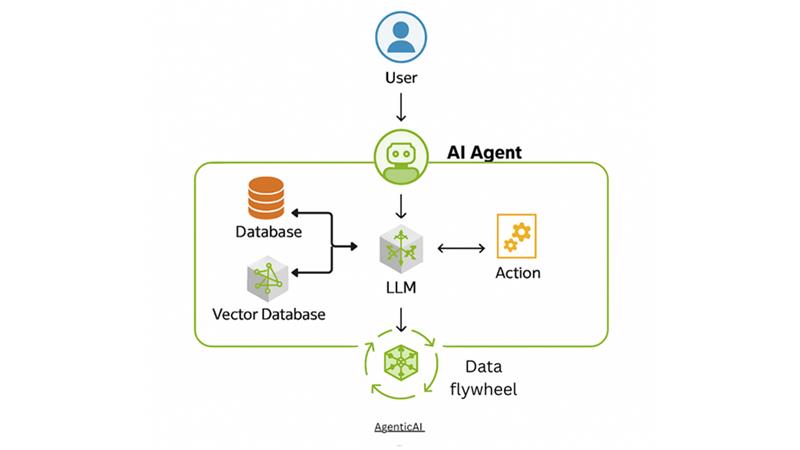

Creating an agentic AI involves combining several pieces around a foundational model. A typical agentic system includes:

- Foundation Model (LLM) : A powerful language model (GPT-4, Llama, etc.) that provides the core reasoning, language understanding, and generation capability.

- Memory & Context Store : A database or vector store to retain information (past interactions, documents, facts). This lets the agent look up relevant history and stay consistent over time, rather than treating each task in isolation.

- Retrieval-Augmented Generation (RAG) : A retrieval system that fetches up-to-date, contextually relevant information from external sources and incorporates it into the model’s prompts. RAG grounds the agent’s reasoning in real-world data, which helps reduce hallucinations and keep outputs current.

- Planner/Reasoner : A module or strategy that breaks down objectives into actionable steps. This might involve chain-of-thought prompting, search algorithms, or explicit planning frameworks. Reasoning and planning guide the agent’s sequence of actions toward the goal.

- Tool Integrations : Interfaces to external APIs and tools (like web search, code execution environments, databases, or custom services). Tool use is a defining feature of agentic AI—it bridges the gap between language and action. For example, an agent might use a calculator API, a web browser, or internal business systems to perform tasks.

- Orchestration & Guardrails : Supervisory logic that coordinates the components above and ensures safe operation. This includes chaining multiple models, managing workflows, and applying safeguards (e.g. checks on outputs) so the agent stays on track and avoids mistakes.
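To show how the planner, tool integrations, and guardrails fit together, here is a minimal orchestration sketch. Everything in it is hypothetical: `stub_planner` stands in for a prompt to a foundation model that returns a plan, the two tools are toys, and the guardrail is a simple allow-list of registered tools:

```python
from typing import Callable


# Hypothetical tools; real ones would wrap web search, code execution, or business APIs.
def add_numbers(arg: str) -> str:
    a, b = (float(x) for x in arg.split(","))
    return str(a + b)


def lookup_fact(arg: str) -> str:
    facts = {"capital of france": "Paris"}
    return facts.get(arg.lower(), "unknown")


TOOLS: dict[str, Callable[[str], str]] = {"add": add_numbers, "lookup": lookup_fact}


def stub_planner(goal: str) -> list[tuple[str, str]]:
    """Stand-in for an LLM planner: turns a goal into (tool, argument) steps."""
    if goal == "sum":
        return [("add", "2,3")]
    # The second step names a tool that is not registered, to exercise the guardrail.
    return [("lookup", goal), ("delete_db", "")]


def guardrail(tool: str) -> bool:
    """Orchestration check: only registered tools may run."""
    return tool in TOOLS


def run(goal: str) -> list[str]:
    results = []
    for tool, arg in stub_planner(goal):
        if not guardrail(tool):
            results.append(f"blocked: {tool}")
            continue
        results.append(TOOLS[tool](arg))
    return results


print(run("sum"))                # ['5.0']
print(run("capital of France"))  # ['Paris', 'blocked: delete_db']
```

Keeping the planner separate from tool execution means the guardrail can inspect every proposed action before anything touches the outside world.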

By combining these elements, an agent can observe new data, remember relevant context, plan a response, and then execute it in the world. For instance, an agent might recall earlier project notes from its memory, use the LLM to devise a plan of action, call external APIs to gather data, and finally generate a report or command based on that information. This modular architecture abstracts away much of the manual coding: engineers can focus on defining goals and workflows rather than coding each step by hand.
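The "recall earlier project notes from its memory" step is where the memory store and RAG meet. Below is a minimal sketch of that retrieval step. As a deliberate simplification, it swaps in word-count cosine similarity where production systems would use learned embeddings and a vector store; `MemoryStore` and `build_prompt` are hypothetical names:

```python
import math
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words vector (a stand-in for a learned embedding)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Toy memory: stores documents and returns the k most similar to a query."""

    def __init__(self) -> None:
        self.docs: list[str] = []

    def add(self, doc: str) -> None:
        self.docs.append(doc)

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        q = vectorize(query)
        ranked = sorted(self.docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)
        return ranked[:k]


def build_prompt(store: MemoryStore, question: str) -> str:
    """Retrieval-augmented prompt: retrieved context is placed ahead of the question."""
    context = "\n".join(store.retrieve(question, k=1))
    return f"Context:\n{context}\n\nQuestion: {question}"


store = MemoryStore()
store.add("project apollo notes deadline moved to friday")
store.add("team lunch is on wednesday")
print(build_prompt(store, "when is the apollo deadline"))
```

The resulting prompt would then be sent to the LLM, so its answer is grounded in the stored note rather than in whatever the model happens to recall.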

How Agentic AI Works: The Four-Stage Cycle

Beyond its architecture, agentic AI follows a repeatable four-stage operational loop that makes it dynamic and adaptive:

- Observe : The agent perceives new input (from a user, environment, API, etc.). This could be an email, a dataset, an anomaly alert, or any other signal.

- Analyze : The agent reasons about the situation. Using memory, rules, or its internal planner, it determines possible courses of action.

- Act : It executes one or more actions to move toward its goal. This could involve calling an external tool, modifying data, sending a notification, or triggering another agent.

- Learn (Data Flywheel Activation) : Post-action, the agent collects feedback and outcomes and integrates this new data to refine its models and strategies, so each pass through the loop can inform the next.

This cycle allows agents to adapt over time, optimize their behavior, and become increasingly effective without constant human guidance. Each loop tightens their alignment with goals, making them smarter with experience: a foundational leap beyond static ML models.
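The Observe → Analyze → Act → Learn loop can be sketched as a small class. This is a toy, assuming "learning" means keeping a running score per action (+1 on success, -1 on failure) and preferring the best-scoring action next time; real agents might instead update memory, prompts, or model weights. `LoopAgent` and the `flaky_pipeline` environment are hypothetical:

```python
from collections import defaultdict
from typing import Callable

# An environment takes (signal, action) and reports whether the action worked.
Environment = Callable[[str, str], bool]


class LoopAgent:
    def __init__(self, actions: list[str]) -> None:
        self.actions = actions
        # scores[signal][action]: +1 per success, -1 per failure.
        self.scores: dict[str, dict[str, int]] = defaultdict(lambda: defaultdict(int))

    def analyze(self, signal: str) -> str:
        stats = self.scores[signal]
        # Pick the best-scoring action; max() keeps the earliest action on ties.
        return max(self.actions, key=lambda a: stats[a])

    def learn(self, signal: str, action: str, succeeded: bool) -> None:
        self.scores[signal][action] += 1 if succeeded else -1

    def run_once(self, signal: str, environment: Environment) -> str:
        # Observe: `signal` is the incoming input (alert, email, API response, ...).
        action = self.analyze(signal)            # Analyze
        succeeded = environment(signal, action)  # Act; the environment reports the outcome
        self.learn(signal, action, succeeded)    # Learn
        return action


def flaky_pipeline(signal: str, action: str) -> bool:
    """Hypothetical environment where only restarting the job fixes a failure."""
    return action == "restart_job"


agent = LoopAgent(["notify_human", "restart_job"])
print([agent.run_once("job_failed", flaky_pipeline) for _ in range(3)])
# ['notify_human', 'restart_job', 'restart_job']
```

After one failed attempt, the feedback shifts the agent toward the action that worked, which is the flywheel effect in miniature.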

Real-World Agentic Applications

Agentic AI is already powering innovative solutions in various domains. For example, imagine an agent that constantly monitors data pipelines for anomalies, or an intelligent helpdesk assistant that autonomously resolves customer support tickets. Below are two illustrative scenarios:

- Data Pipeline Monitoring Agent : This agent continuously watches a data pipeline’s health (job logs, data quality metrics, etc.). When it detects an issue (say, a failed job or unexpected data distribution), it analyzes the situation: “Why did this fail?” It might consult past incident records (memory) and documentation, then decide on a fix. The agent could automatically restart jobs, reroute data, or adjust parameters via system APIs. It can even draft an alert message summarizing the issue. After each incident, the outcome is logged in memory so the agent improves over time. In short, the pipeline agent observes, diagnoses, acts, and learns—all without a human manually inspecting each alert.

- Customer Support Resolution Agent : This agent acts as an intelligent helpdesk assistant that autonomously resolves customer issues end-to-end. When a user submits a support ticket, the agent reads the query, retrieves relevant past tickets or documentation from its memory, and categorizes the problem. If it’s a known issue (e.g., login failures, billing errors), the agent drafts a personalized response and triggers backend actions—like resetting a password or issuing a refund—via API. If the issue is new, it escalates it with a detailed internal note summarizing the context and previous troubleshooting steps. Over time, it learns to handle more cases independently as it builds a knowledge base from resolved tickets. This reduces human workload while improving response speed and consistency.

These examples illustrate the typical agentic workflow: Observe → Analyze → Act → Learn. The pipeline agent monitors data, runs diagnostics, and then remediates issues; the support agent reads tickets, retrieves relevant history, and responds with actions (replies, password resets, refunds, or escalations). In each case, the agent uses its LLM brain plus tools (logging systems, ticketing systems, backend APIs) to move the process forward autonomously.
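For the customer support scenario, the triage, escalation, and learning steps can be sketched with a keyword lookup standing in for the LLM's categorization. `SupportAgent`, its keyword table, and action names such as `reset_password` are hypothetical placeholders for real backend calls:

```python
from dataclasses import dataclass, field


@dataclass
class SupportAgent:
    # Hypothetical knowledge base: keyword -> (category, backend action).
    known_issues: dict[str, tuple[str, str]] = field(default_factory=lambda: {
        "password": ("login", "reset_password"),
        "refund": ("billing", "issue_refund"),
    })

    def triage(self, ticket: str) -> dict[str, str]:
        """Categorize a ticket; known issues map to an action, new ones are escalated."""
        text = ticket.lower()
        for keyword, (category, action) in self.known_issues.items():
            if keyword in text:
                return {"category": category, "action": action}
        return {"category": "unknown", "action": "escalate",
                "note": f"New issue with no matching past ticket: {ticket!r}"}

    def learn_from_resolution(self, keyword: str, category: str, action: str) -> None:
        """Add a resolved case to the knowledge base so similar tickets are handled next time."""
        self.known_issues[keyword.lower()] = (category, action)


agent = SupportAgent()
print(agent.triage("I forgot my password"))             # {'category': 'login', 'action': 'reset_password'}
print(agent.triage("App crashes on export")["action"])  # escalate
agent.learn_from_resolution("export", "bug", "apply_export_patch")
print(agent.triage("App crashes on export")["action"])  # apply_export_patch
```

In a real deployment the categorization would come from the LLM and the actions would be API calls, but the control flow (resolve, escalate, or learn) stays the same.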

Conclusion & Call to Action

Agentic AI represents an exciting new chapter for machine learning. By wrapping powerful models with memory, reasoning, and tool-use, we can build systems that go beyond static predictions to become proactive collaborators. For ML engineers and enthusiasts, this means thinking bigger: instead of tuning a model for accuracy, you can design agents to solve whole problems end-to-end.

What could you build with an AI agent at your disposal? We’d love to find out. If you’re experimenting with agentic architectures or have ideas for new agent-driven workflows, share your experiences! Join the conversation in the comments, show off your projects on social media, or write about your experiments. Together, we’ll shape the future of autonomous AI and push the boundaries of what machine learning can do.
